What does the term severity mean, in the context of incidents involving software systems?

Merriam-Webster gives us this:

“the quality or state of being severe: the condition of being very bad, serious, unpleasant, or harsh.”

Here are a few colloquial definitions:

“Severity measures the effort and expense required by the service provider to manage and resolve an event or incident.” – (link)

“…the severity of an error indicates how serious an issue is depending on the negative impact it will have on users and the overall quality of an application or website.” – (link)

“SEV levels are designed to be simple for everyone to quickly understand the amount of urgency required in a situation.” – (link)

For the ITIL-leaning folks, here is “major incident”:

“Major Incidents cause serious interruptions of business activities and must be solved with greater urgency.” – (link)

As we study different organizations across the industry, we’ve asked people questions about what “severity levels” mean to them, including:

- what the purpose of assigning severity level is

- what/when/how these levels are defined – and how broadly or generally they are worded

- how this labeling activity after the incident (in hindsight) differs from what incident responders say during the incident, and how that assessment might change as the incident evolves and unfolds

The conclusion that we’ve come to is that the term “severity” has many different meanings and purposes (in the same way post-incident reviews do) when it is used to categorize incidents. In addition, these meanings and purposes can vary even within the same organization.

It would make sense that definitions of “severity level” would vary from company to company; every business is different. What is deemed important to one business may not be important to another. Therefore, we can safely say that there can’t be a One Severity Level Schema To Rule Them All™ – these schemas (ideally) will be designed to be contextual for that organization.

For example, here is how I defined severity levels at Etsy many years ago (I can imagine that these may have changed since I left):

| Level | Description |
| --- | --- |
| 1 | Complete outage or degradation so severe that core functionality is unusable |
| 2 | Functional degradation for a subset of members or loss of some core functionality for all members |
| 3 | Noticeable degradation or loss of minor functionality |
| 4 | No member-visible impact; loss of redundancy or capacity |
| 5 | Anything worth mentioning not in the above levels |
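A schema like this is often encoded directly in tooling. As a minimal sketch (the names `Severity`, `DESCRIPTIONS`, and `describe` are hypothetical and not taken from any real Etsy system), the table above could be represented in Python like this:

```python
from enum import IntEnum


class Severity(IntEnum):
    """Levels from the table above; a lower number means a more severe incident."""

    SEV1 = 1
    SEV2 = 2
    SEV3 = 3
    SEV4 = 4
    SEV5 = 5


# Descriptions copied from the table; the mapping itself is an illustrative assumption.
DESCRIPTIONS = {
    Severity.SEV1: "Complete outage or degradation so severe that core functionality is unusable",
    Severity.SEV2: "Functional degradation for a subset of members or loss of some core functionality for all members",
    Severity.SEV3: "Noticeable degradation or loss of minor functionality",
    Severity.SEV4: "No member-visible impact; loss of redundancy or capacity",
    Severity.SEV5: "Anything worth mentioning not in the above levels",
}


def describe(level: Severity) -> str:
    """Return a short label such as 'SEV-4: No member-visible impact; ...'."""
    return f"SEV-{level.value}: {DESCRIPTIONS[level]}"


print(describe(Severity.SEV4))
```

Note that encoding the levels this way only captures the wording of the definitions; it says nothing about how people actually apply them.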

More often than not, “severity level” is given as a way of categorizing the impact an incident has had on the customers of the business.

But should there always be a 1:1 mapping between severity and customer impact?

Severity as urgency or escalation signal

In some cases, the concept of severity level is used by people responding to an incident as a way to describe their current assessment of the event to relay to others. An engineer may also use severity level as a way to assess whether to call others for help; we have seen this even when an engineer can’t truly justify the severity level they’re declaring at the time. The stance, in this case, is that it is better to assert a higher severity level and later be found mistaken than the other way around.

They might also use this categorization as a way to communicate via shorthand with non-engineering staff (such as customer support or public relations or legal, etc.) without having to translate specific technical jargon for them. Some organizations use severity level as criteria to kick off internal actions or procedures. For example, a Customer Support group might take some actions if an incident is labeled a “sev 2” or above.

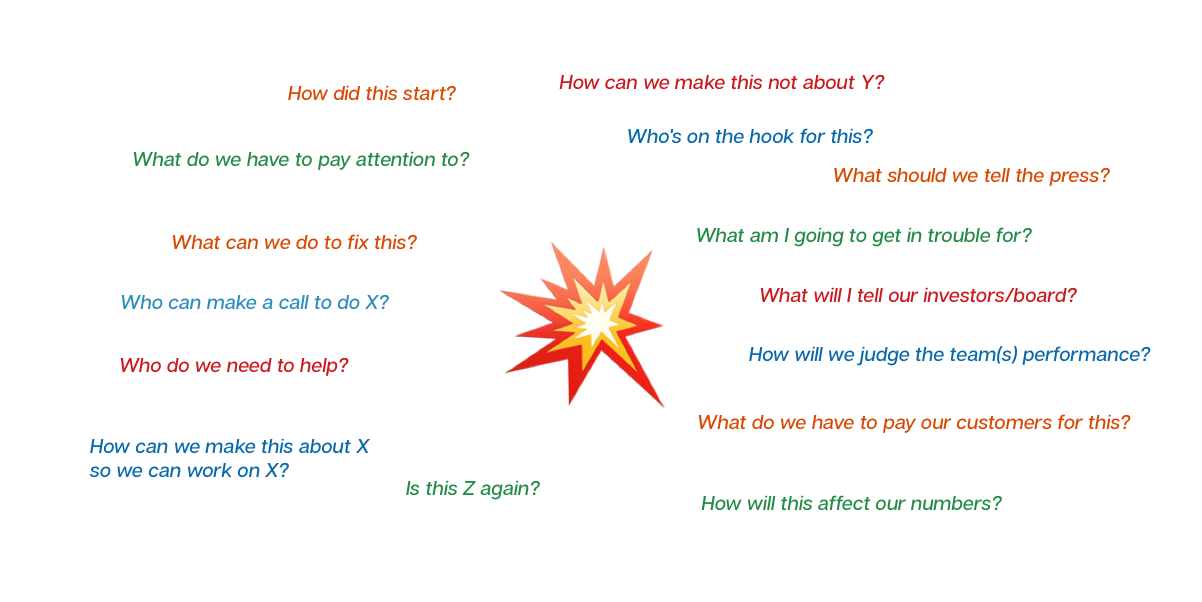

In any case, making an assessment of an incident’s severity level during an event is not always straightforward. Various guidance is given around rules-of-thumb with respect to this:

“If you are unsure which level an incident is (e.g. not sure if SEV-2 or SEV-1), treat it as the higher one. During an incident is not the time to discuss or litigate severities, just assume the highest and review during a post-mortem.” (link)
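Expressed as code, the rule of thumb quoted above is nearly a one-liner. The sketch below is hypothetical (both function names are invented for illustration) and assumes the common convention that a lower number means a higher severity, which is also what makes a trigger such as “sev 2 or above” a numerically *smaller-or-equal* comparison:

```python
def resolve_uncertain_severity(candidates: list[int]) -> int:
    """When unsure between levels, treat the incident as the most severe candidate.

    Assumes a lower number is a higher severity (SEV-1 is more severe than SEV-2).
    """
    if not candidates:
        raise ValueError("at least one candidate severity level is required")
    return min(candidates)


def meets_threshold(level: int, threshold: int) -> bool:
    """True when `level` is 'sev <threshold> or above', i.e. numerically <= threshold."""
    return level <= threshold


# Unsure whether this is a SEV-2 or a SEV-1: treat it as SEV-1.
declared = resolve_uncertain_severity([2, 1])
print(declared, meets_threshold(declared, 2))
```

The code is trivial; the hard part, deciding which candidate levels are even plausible while the event is still unfolding, is exactly what it leaves out.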

This guidance seems reasonable – time is of the essence! However, in practice, we see the beginning of many incidents fraught with uncertainty. The question “…ok is this now an actual incident?” has appeared in many events we have studied, and when we ask about this we hear that even declaring that a given event is an actual “incident” is not always straightforward, let alone the severity level of the event.

We’ve also observed that at the beginning of almost any incident, it’s often uncertain or ambiguous to responders whether it represents a “rough Tuesday afternoon” or a “viability-crushing” event. These characterizations can only be made in hindsight.

When we have seen responders declare the severity level at the beginning of an event, it is for the most part because the “severity-defining” elements of the incident are clear.

In almost every group we have studied, engineers with experience in responding to incidents have reflected that their sense of how “severe” an incident is can sometimes fluctuate wildly as the actual event unfolds.

Severity as post-incident task or hiring budget priority

Severity levels are also used by some organizations to assign priority to follow-up “remediation tasks” associated with the particular incident. The higher the severity level, the greater the priority is on the ticket/task. The effects of this priority-setting can vary; in some cases, the priority dictates the “due date” of the task. In other cases, it simply allows people to sequence or order which tasks get worked on before others.

Likewise, incidents that carry with them significant “levels” of severity can be very useful for an engineering manager who is looking to expand their team but, without incidents, cannot seem to be granted the headcount budget.

Seen in this light, “severity” could be seen as a currency that product owners and/or hiring managers could use to ‘pay’ for attention.

Severity as contractual customer obligation

In some organizations, the severity level of an incident can also dictate specific actions to be taken as part of a written contract or agreement with a customer. Service Level Agreements (SLAs) may be written such that “sev 3” and below (for example) incidents do not require any sort of refund or credit towards future fees; schedules of such refunds or credits are frequently attached to severity levels above this value.

In this case, severity level definitions are not only cast into writing, but they also represent legal obligations. Like many legal constructs, we tend to see these written to be less crisp and clear — with more space for open interpretation.

Seen in this light, “severity” could be seen as an economic artifact.

Severity as trigger for post-incident analysis and review

In some organizations, the decision to do a post-incident review or analysis (sometimes called “postmortems”) can hinge specifically on the severity level. The implication is that an event’s potential to reveal insights about the system(s) is gauged on a singular numeric scale, not on features or qualities of the incident. This becomes even more problematic in cases where severity levels are explicitly proportional to “customer impact.” This yields the logic that if a customer was affected, learning about the incident is worth the effort, and if no customers experienced negative consequences from the incident, then there must not be much to learn from it.

Is this reasonable? If we follow this line of reasoning a bit further, it contrasts with the idea that dynamics in software can exist yet remain latent in a system until particular conditions reveal (or ‘trigger’) their presence. In Dr. Richard Cook’s seminal article How Complex Systems Fail (Cook, 1999) this is described thusly:

“Complex systems contain changing mixtures of failures latent within them.

The complexity of these systems makes it impossible for them to run without multiple flaws being present. Because these are individually insufficient to cause failure they are regarded as minor factors during operations. Eradication of all latent failures is limited primarily by economic cost but also because it is difficult before the fact to see how such failures might contribute to an accident. The failures change constantly because of changing technology, work organization, and efforts to eradicate failures.”

If the only incidents that are investigated are those with customer impact, then these activities are by definition only lagging signals, not leading ones, representing a reactive stance to learning from incidents, and not a proactive one. In addition, organizations that take this view will not be able to use “near miss” events as productive events to learn from.

Severity as “quality” accounting index

In some organizations, the severity level for incidents is used as a sort of “primary key” index for sorting incidents and computing statistics that are expected to represent the most important facets of incidents (FWIW, they don’t). In some cases, managers will construct charts and plots of Time-To-Resolve, Time-To-Detect, Frequency, etc. for each severity level, and some might calculate a moving average of this data.

Whether this data represents the value people believe it does is a different story. In situations where line-level managers are creating these spreadsheets and charts for presentation or delivery to higher levels of management, many of them report that they don’t have a clear understanding of the value of this exercise and that these calculations do not represent what upper management assumes they do.

Many of these chart-creators will continue to create these “shallow data” plots because, while they don’t believe the data characterizes how much “better” or “worse” their engineering teams are doing, doing so can serve as a tactic to garner upper management support (a.k.a. “blessed political ammunition”) for non-feature development work such as refactoring or relieving production pressure in various ways.

Seen in this light, “severity” could be seen as a political artifact that can be used to direct attention toward some areas of interest by those at the “sharp end” or away from other areas.

Severity as a qualitative measure of difficulty?

When talking to people outside of this industry who aren’t familiar with these norms listed above, some have reported that they assumed that severity level is proportional to how difficult it is for engineers to resolve or handle the incident. Clearly, this can’t be the case; some severity level definitions allow for a “sev 1” label to be applied to an event whose resolution was straightforward, and in our experience, it is not difficult to locate “sev 4” events that puzzle even the most experienced engineers for a long time.

We could then conclude…

- More often than not (we have seen some rare exceptions to this), “severity” is viewed as proportional to customer impact.

- If an event has some severity level attached to it, then it must be an “incident.” No sev level? Must not have been an incident.

- If something is an “incident” (has a severity level) then it will get some sort of attention.

- This attention can be from boots-on-the-ground practitioners, or distanced-from-details leadership/management.

- This attention can be seen as:

  - positive (want more of it to further progress or agendas in fruitful directions) in which case higher sev level is seen as better, or

  - negative (want less of it to reduce the scrutiny that management places on our areas of interest)

In any case, “severity” levels are not objective measures of anything in practice, even if they’re assumed to be so in theory.

They are negotiable constructs that provide an illusion of control or understanding, or footholds for people as they attempt to cope with complexity.

What does this leave us with, then? The point of this post — as with all the work we do at Adaptive Capacity Labs — is to take our observations on the messy, grounded, and real world of practice and shine some light on them. This post isn’t a comment on how the concept of severity levels should be used, it is a set of observations on how “severity levels” are actually being used.

What should you do with this information then, if this is not about giving advice or guidance?

Explore how severity levels are being used in your organization, especially after the incident is long over. Here are some ideas to mull over once you’ve done that, along with some suggestions about the ways in which severity levels may affect more than simply the intensity, harshness, or difficulty of an incident:

- Gather multiple incidents that have been “given” the same severity level, and look closely at them.

  - Were they equally difficult to diagnose? Or were some more challenging than others?

  - Were they equally attended to by engineers or teams? Or were some responded to with a broader set of teams and expertise than others?

  - Did they all result in the same quantity or quality of follow-up action items in their post-incident review? Or did that vary from case to case?

  - Ask the people involved in responding to them for their perspective on how significant the events were for them. Did they all respond similarly, or did their responses vary?

- Can you find cases where people openly hold contrasting views about (or even debate) what severity level a given incident should be given?

- What downsides do people say exist for raising the severity level of a given event?

- What downsides do people say exist for lowering the severity level of a given event?

- Is there a severity level threshold that will bring senior leadership scrutiny? Does such scrutiny match (proportionally) the concern that boots-on-the-ground have for the system(s) or areas involved?

- Do any explicit compensation incentives exist around incident metrics, influenced by severity level, such as bonuses, etc.?
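As a starting point for the first suggestion in the list above, gathering incidents that share a severity level, a few lines of Python can show how widely incidents with the same label differ on even one crude dimension. This is only a sketch: the records are invented for illustration, `spread_by_severity` is a hypothetical helper, and minutes-to-resolve is a shallow stand-in for the qualitative questions, which can only be answered by talking to the people involved.

```python
from collections import defaultdict

# Hypothetical records, invented for illustration:
# (incident id, severity level, minutes to resolve)
incidents = [
    ("inc-a", 2, 35),
    ("inc-b", 2, 410),
    ("inc-c", 4, 15),
    ("inc-d", 4, 1300),
    ("inc-e", 1, 50),
]


def spread_by_severity(records):
    """Group records by severity level and return (count, min, max) minutes per level."""
    by_level = defaultdict(list)
    for _incident_id, level, minutes in records:
        by_level[level].append(minutes)
    return {
        level: (len(values), min(values), max(values))
        for level, values in sorted(by_level.items())
    }


for level, (count, shortest, longest) in spread_by_severity(incidents).items():
    print(f"sev {level}: {count} incident(s), resolved in {shortest} to {longest} minutes")
```

A wide spread within a single level is a prompt to go and look closely at those incidents, not a finding in itself.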

Many of these less explicit purposes and dynamics surrounding severity levels (and most incident metrics, for that matter) are familiar to many readers.

Incidents create energy, and are agnostic as to how that energy is channeled and used in response. Understanding how (and by whom) it’s being used is critical to build expertise in learning from incidents. Otherwise: the elephants are in the room, and the emperor’s new clothes are seemingly stylish.